South Korean scientists have unveiled a semiconductor chip that imitates the way the human brain learns, a notable step forward for artificial intelligence hardware. A team from the Korea Advanced Institute of Science and Technology (KAIST) has developed a neuromorphic computing chip that performs learning tasks far more efficiently than traditional designs. The result suggests that the gap between brain-inspired cognition and silicon-based computing is narrowing.

1. Understanding Neuromorphic Computing and Brain-Inspired Design

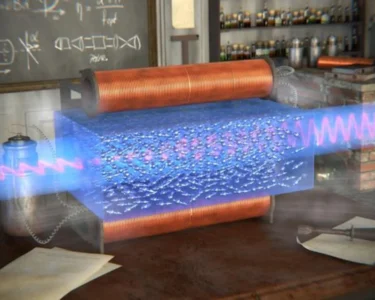

To understand why this chip matters, it helps to first explain neuromorphic computing. Unlike conventional computing architectures, which separate memory and processing units, neuromorphic systems integrate these functions, much as a biological brain does. KAIST researchers, for example, describe their chip as mimicking “intrinsic plasticity”: the brain’s ability to adapt its own response characteristics.

The human brain also operates in a massively parallel way, with synapses dynamically changing the strength of the connections between neurons based on exposure and experience. Neuromorphic chips aim to replicate this by using memristors or other artificial synaptic devices that can both store and process information.

This approach promises substantial improvements in power efficiency, responsiveness, and real-time learning capability.
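To make the idea of devices that "both store and process information" more concrete, the following Python sketch models a toy synapse array in which the stored weights are also the elements that compute the output. It is an illustration under simplifying assumptions, not a model of the KAIST hardware: the class name `SynapseArray`, the Hebbian-style update rule, the learning rate and the weight ceiling `w_max` are all hypothetical choices made for this example.

```python
class SynapseArray:
    """Toy synapse array: the stored weights are also the compute elements."""

    def __init__(self, n_inputs, n_outputs, initial_weight=0.1):
        # weights[i][j] connects input i to output j
        # (loosely analogous to the conductance of one synaptic device).
        self.weights = [[initial_weight] * n_outputs for _ in range(n_inputs)]

    def forward(self, inputs):
        # Each output is a weighted sum of the inputs, computed directly
        # from the stored weights rather than from a separate memory.
        n_outputs = len(self.weights[0])
        return [
            sum(inputs[i] * self.weights[i][j] for i in range(len(inputs)))
            for j in range(n_outputs)
        ]

    def hebbian_update(self, inputs, outputs, learning_rate=0.05, w_max=1.0):
        # Strengthen a synapse when its input and output are active together,
        # clipping at w_max to mimic a bounded device range (an assumption).
        for i, x in enumerate(inputs):
            for j, y in enumerate(outputs):
                updated = self.weights[i][j] + learning_rate * x * y
                self.weights[i][j] = min(w_max, updated)


array = SynapseArray(n_inputs=3, n_outputs=2)
pattern = [1.0, 0.0, 1.0]  # inputs 0 and 2 are active, input 1 is silent

before = array.forward(pattern)
for _ in range(10):
    array.hebbian_update(pattern, array.forward(pattern))
after = array.forward(pattern)

print("response before exposure:", before)
print("response after exposure: ", after)
```

Repeated exposure to the same pattern strengthens only the synapses on the active inputs, so the array responds more strongly to that pattern afterwards, while the synapses on the silent input keep their initial weight.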

2. What the Korean Research Achieved

The KAIST team, led by Professor Yoo Hoi‑jun, announced what it calls a “complementary-transformer” AI chip, built on a 28-nm manufacturing process and drawing on neuromorphic computing principles (The Korea Times).

The team demonstrated that the chip could run certain large language model (LLM) tasks, such as summarization, translation and Q&A, offline and with far less power than conventional GPU-based systems (Korea Joongang Daily).

The chip measures just 4.5 mm × 4.5 mm, required only 0.4 seconds for certain LLM tasks, and consumed only about 400 mW of power, compared with around 250 W for a typical high-end GPU (Korea Joongang Daily).

In short, the team showed that this brain-inspired chip is more than a theoretical design: it works, and it works efficiently.
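The reported figures can be put side by side with some simple arithmetic. The Python sketch below uses only the numbers quoted above (about 400 mW, around 250 W, 0.4 seconds, 4.5 mm × 4.5 mm); the variable names are chosen for this example. Note that it compares power draw only. The time a GPU would need for the same task is not given here, so no energy-per-task comparison against the GPU is attempted.

```python
# Figures as quoted in the article; all values are approximate.
chip_power_w = 0.4    # about 400 mW
gpu_power_w = 250.0   # typical high-end GPU figure
task_time_s = 0.4     # reported time for certain LLM tasks
chip_side_mm = 4.5    # the chip measures 4.5 mm x 4.5 mm

# Ratio of power draw (not of energy per task).
power_ratio = gpu_power_w / chip_power_w

# Energy the chip uses for one such task: power x time.
chip_energy_j = chip_power_w * task_time_s

# Footprint of the chip.
chip_area_mm2 = chip_side_mm * chip_side_mm

print(f"GPU power draw is roughly {power_ratio:.0f}x that of the chip")
print(f"Chip energy per task: roughly {chip_energy_j:.2f} J")
print(f"Chip footprint: {chip_area_mm2:.2f} mm^2")
```

On these figures the GPU draws roughly 625 times the chip's power, and a single 0.4-second task costs the chip roughly 0.16 J.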

3. Why This Matters for Learning & Adaptation

This achievement is not just about faster computing; it is about learning and adaptation. The chip is designed so that it can adjust synaptic weights and neuron-like behaviours internally, akin to how our brain rewires itself when learning (english.etnews.com).

For example, the chip demonstrates intrinsic plasticity: the ability to change its response based on past stimuli without explicit re-programming (아시아경제).

Moreover, learning on-device, rather than relying on cloud-based servers, means the chip can adapt in real time and handle new inputs immediately, with lower latency and lower power. This opens doors for smart sensors, autonomous devices, and edge computing systems that must learn in situ.
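Intrinsic plasticity, as described above, can be illustrated with a small software model. The Python sketch below simulates a leaky integrate-and-fire neuron whose firing threshold rises each time it fires and slowly relaxes back toward a baseline, so the same stimulus produces a different response depending on the neuron's history. This is a common textbook-style simplification, not the mechanism used in the KAIST chip; the class name `AdaptiveNeuron` and every parameter value are assumptions made for this example.

```python
class AdaptiveNeuron:
    """Leaky integrate-and-fire neuron with an adaptive firing threshold."""

    def __init__(self, base_threshold=1.0, leak=0.9,
                 threshold_step=0.5, threshold_decay=0.99):
        self.potential = 0.0
        self.base_threshold = base_threshold
        self.threshold = base_threshold
        self.leak = leak                        # fraction of potential kept per step
        self.threshold_step = threshold_step    # threshold increase after each spike
        self.threshold_decay = threshold_decay  # how slowly the threshold recovers

    def step(self, stimulus):
        # Leaky integration of the incoming stimulus.
        self.potential = self.leak * self.potential + stimulus
        # The threshold relaxes toward its baseline between spikes.
        excess = self.threshold - self.base_threshold
        self.threshold = self.base_threshold + self.threshold_decay * excess
        if self.potential >= self.threshold:
            # Fire, reset the potential, and become harder to excite.
            self.potential = 0.0
            self.threshold += self.threshold_step
            return 1
        return 0


def count_spikes(neuron, stimulus, steps):
    """Drive the neuron with a constant stimulus and count its spikes."""
    return sum(neuron.step(stimulus) for _ in range(steps))


neuron = AdaptiveNeuron()
early = count_spikes(neuron, stimulus=1.0, steps=20)  # first exposure
late = count_spikes(neuron, stimulus=1.0, steps=20)   # same input, after adaptation

print("spikes in first 20 steps:", early)
print("spikes in next 20 steps: ", late)
print("threshold after exposure:", round(neuron.threshold, 3))
```

No weights are reprogrammed here: the neuron fires less often in the second window purely because its own threshold has shifted in response to past activity.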

4. Implications for Edge Computing & Real-World Applications

The implications are far-reaching. Because the chip is small, efficient and capable of on-device learning, it could enable many applications that were previously impractical. For example:

- Smart cameras or security systems that learn to recognise behaviours or anomalies locally without cloud connectivity.

- Wearables or IoT devices that adapt to user habits, context or environment without draining batteries.

- Autonomous robots or vehicles that must respond and learn on the fly with limited power budgets.

Finally, since the chip can process LLM-type tasks on device, language translation, voice assistants, or personalised AI features can be embedded in devices rather than relying fully on cloud infrastructure.

5. Challenges and Considerations

Nevertheless, there are important caveats. First, while these chips demonstrate learning capability, generalising to all types of AI tasks and achieving the versatility of the human brain is still far off. Researchers emphasise this is a foundational technology, not the finished product (The Korea Times).

Second, manufacturing such neuromorphic chips at scale, integrating them into commercial devices, ensuring reliability, and managing cost remain non-trivial hurdles. Third, software ecosystems (algorithms, frameworks) must adapt to the new hardware paradigm, because learning rules, spiking neural behaviours and neuromorphic architectures differ from those of conventional neural networks.

While the excitement is justified, expectations of immediate mass deployment should be tempered.

6. How the Korean Innovation Compares Globally

This development from Korea fits into a global push toward neuromorphic computing. For instance, other teams have reported memristor-based devices or chips with integrated memory-and-compute functions (CM Asiae).

What sets the Korean work apart is the integration of large-language-model functionality (via the transformer design) and the high degree of miniaturisation and power efficiency. Accordingly, the Korean team is among the first to bring LLM-style workloads under the neuromorphic umbrella with real efficiency gains.

Thus, their innovation may set a benchmark for next-generation AI hardware.

7. The Future of Brain-Inspired Chips and Learning Machines

Looking ahead, the impact of brain-inspired chips could be far-reaching. As devices become smarter, more autonomous, and able to learn on their own, our interaction with technology may shift from issuing commands to collaborative adaptation. Future smart systems might continuously learn, refine, and optimise themselves.

Moreover, as power constraints and latency become ever more critical (especially in edge, mobile, AR/VR and robotics applications), neuromorphic hardware could become a cornerstone of sustainable AI. Eventually, we might see systems that not only process data but also adapt and improve in the field, much as our brains do.

Finally, we should consider ethical and societal implications: smarter devices mean more autonomy, more local data processing, and less reliance on central servers, but they also raise questions of privacy, control, and transparency.

Conclusion

In summary, the Korean-led research into brain-inspired neuromorphic chips marks a major milestone in AI hardware. By mimicking how the human brain learns (through plasticity, integration of synapse and neuron functions, and on-device adaptation), these chips promise to bring powerful learning capabilities into everyday devices. Although many challenges remain, the path toward truly intelligent machines that learn as humans do has never been clearer.
